TL;DR: MLOps is the practice of streamlining the deployment, monitoring, and management of machine learning models in production. This article breaks down why traditional software practices don't work for ML, the core components of effective MLOps, and practical first steps for organizations looking to improve their ML deployment capabilities.

Welcome to the first edition of IterAI! I'm excited to kick things off by diving into MLOps - the crucial but often overlooked bridge between creating machine learning models and actually delivering value with them in the real world.

The Problem: Models That Never See Daylight

You might recognize this scenario: A data science team spends months building an impressive machine learning model. It shows great results in the lab. Everyone's excited. Then... nothing happens. Months later, the model still isn't in production, or worse, it's deployed but performing poorly in the real world.

This gap between development and production isn't just frustrating—it's expensive. According to Gartner, only about 35% of analytics projects ever make it to production. For machine learning specifically, that number can be even lower.

Why Traditional DevOps Isn't Enough

If you come from a software engineering background, you might wonder: "Why can't we just use our existing DevOps practices?"

The answer lies in fundamental differences between traditional software and machine learning systems:

Code + Data = Model: In ML, your system behavior is determined not just by code but by data. This means versioning, testing, and deployment all need to handle both.

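Non-deterministic Outputs: Traditional software produces the same output given the same input every time. ML training involves randomness (weight initialization, sampling, data ordering), so two runs on the same code and data can yield slightly different models, and a retrained model may answer the same input differently than its predecessor did.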

Hidden Technical Debt: ML systems have complex, sometimes invisible dependencies that can create unexpected failure modes.

Decay Over Time: Unlike traditional software that works until changed, ML models naturally degrade as the world they model changes (this is called "drift").
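To make "drift" a bit more concrete, here's a minimal sketch of one way to measure it for a single numeric feature: the Population Stability Index (PSI), which compares how values were distributed at training time against how they look now. The function name, the bin count, and the small floor for empty buckets are my own illustrative choices, not a standard API.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    # Fall back to a width of 1.0 when every reference value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp values outside the reference range into the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor (an assumption) keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))
```

Identical samples score 0, and the score grows as the live data moves away from the training data. A commonly quoted rule of thumb treats values above roughly 0.2 as worth investigating, but treat that threshold as a starting assumption to tune for your own data.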

The Core Components of Effective MLOps

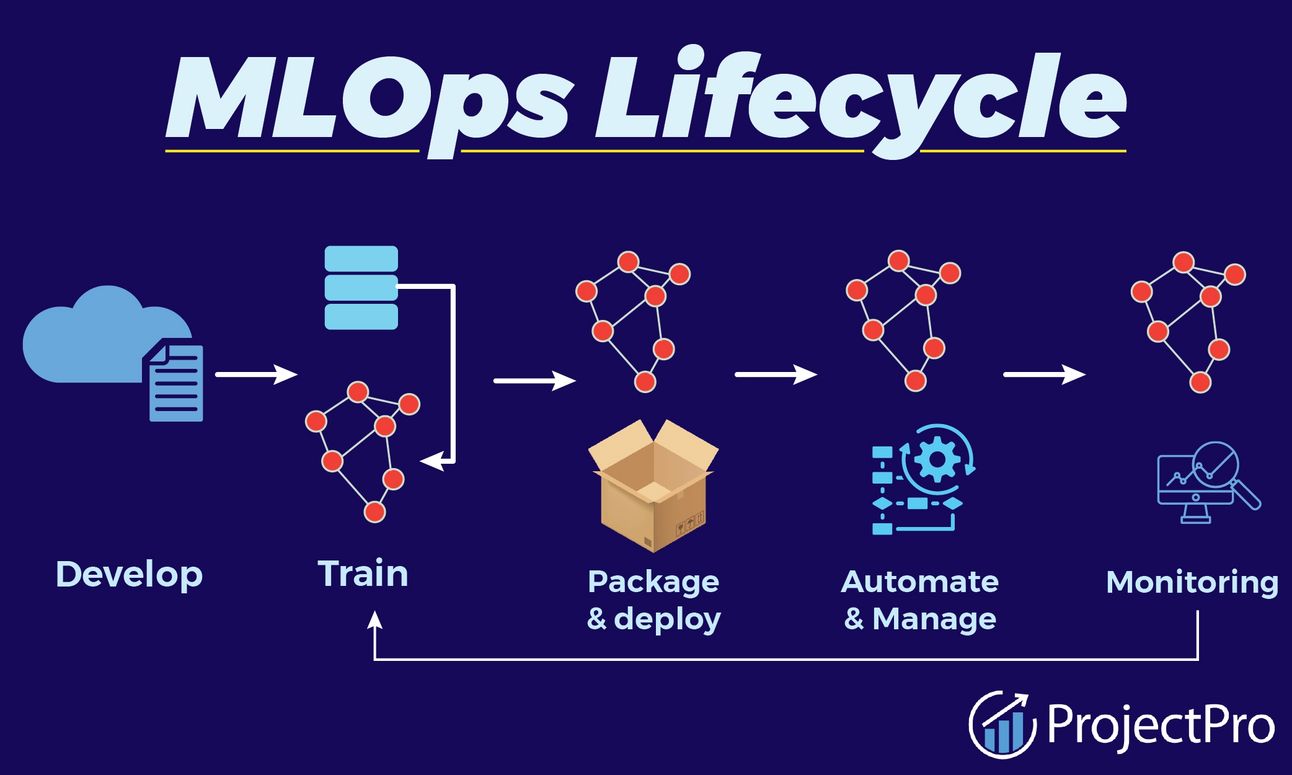

At its heart, MLOps aims to bring rigor and automation to the ML lifecycle. Here are the key components:

1. Reproducible Model Training

Versioning for code, data, and model artifacts

Consistent environments across development and production

Automated, parameterized training pipelines

2. Continuous Integration/Continuous Delivery

Automated testing of models before deployment

Standardized model packaging

Deployment automation with rollback capabilities

3. Model Serving Infrastructure

Scalable inference endpoints

Performance monitoring

Resource management

4. Monitoring and Observability

Data drift detection

Model performance tracking

Business impact metrics

5. Feedback Loops

A/B testing infrastructure

Automated retraining triggers

Feature store for consistent feature engineering
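Two of the items above, automated testing before deployment and automated retraining triggers, often boil down to simple, explicit rules. Here's a rough sketch of what those rules could look like. The `EvalReport` schema, the function names, and every threshold are hypothetical placeholders I made up for illustration; a real gate would use the metrics that matter to your business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalReport:
    """Offline evaluation results for one model version (hypothetical schema)."""
    accuracy: float
    p95_latency_ms: float


def should_promote(
    candidate: EvalReport,
    production: EvalReport,
    min_accuracy_gain: float = 0.0,
    max_latency_ms: float = 200.0,
) -> bool:
    """Return True only if the candidate is accurate enough and fast enough."""
    accurate_enough = candidate.accuracy - production.accuracy >= min_accuracy_gain
    fast_enough = candidate.p95_latency_ms <= max_latency_ms
    return accurate_enough and fast_enough


def should_retrain(
    live_accuracy: float,
    baseline_accuracy: float,
    tolerated_drop: float = 0.05,
) -> bool:
    """Trigger retraining when live accuracy falls too far below the baseline."""
    return baseline_accuracy - live_accuracy > tolerated_drop
```

Writing the rules down as code is the point: a CI job can call `should_promote` before a deployment, and a scheduled monitoring job can call `should_retrain`, so neither decision depends on someone remembering to check a dashboard.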

Maturity Levels: Where Are You?

Organizations typically progress through several levels of MLOps maturity:

Level 0: Manual Process

Data scientists deploy models by hand, with little automation and no monitoring.

Level 1: ML Pipeline Automation

Automated training, but manual deployment and minimal monitoring.

Level 2: CI/CD for ML

Automated deployment, testing, and basic monitoring.

Level 3: Automated Operations

Full automation including drift detection, retraining, and advanced experimentation.

Most organizations today are between levels 0 and 1, with very few reaching level 3.

First Steps: Where to Begin

If you're looking to improve your MLOps capabilities, here are practical starting points:

Document your current ML workflow - Identify manual steps and bottlenecks

Implement version control for everything - Not just code, but data, parameters, and environments

Standardize your model packaging - Create a consistent structure for deploying models

Add basic monitoring - Start with simple performance metrics and alerts

Define clear ownership - Clarify who's responsible for models in production
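For the "version control for everything" step, one lightweight way to start is to record a small manifest next to every trained model that ties together the code version, a hash of the training data, and a hash of the parameters. The sketch below uses only the Python standard library; the manifest fields and function names are assumptions for illustration, and in practice you would likely hash files on disk or lean on a dedicated data versioning tool.

```python
import hashlib
import json
from typing import Any, Dict


def sha256_of_bytes(payload: bytes) -> str:
    """Hex digest used as a content fingerprint."""
    return hashlib.sha256(payload).hexdigest()


def build_manifest(
    code_version: str,
    training_data: bytes,
    params: Dict[str, Any],
) -> Dict[str, Any]:
    """Describe exactly which code, data, and parameters produced a model."""
    # Sorting keys makes the parameter hash independent of dict ordering.
    params_blob = json.dumps(params, sort_keys=True).encode("utf-8")
    manifest: Dict[str, Any] = {
        "code_version": code_version,  # e.g. a git commit hash
        "data_sha256": sha256_of_bytes(training_data),
        "params_sha256": sha256_of_bytes(params_blob),
    }
    # One short ID that changes whenever code, data, or parameters change.
    combined = json.dumps(manifest, sort_keys=True).encode("utf-8")
    manifest["model_id"] = sha256_of_bytes(combined)[:12]
    return manifest
```

If two models share a `model_id`, they came from the same code, data, and parameters. If a production model misbehaves, the manifest tells you which of the three changed since the last good version.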

Real-World Example: From 6 Months to 2 Weeks

A retail company I worked with reduced their model deployment time from 6 months to 2 weeks by implementing basic MLOps practices. Their first step? Creating a standardized Docker container for model deployment that data scientists could use without involving engineering teams for each deployment.

Quick Bytes

Tool Worth Exploring: MLflow is an open-source platform for the ML lifecycle that helps track experiments, package code, and deploy models.

Paper to Read: "Hidden Technical Debt in Machine Learning Systems" by Google researchers remains essential reading for understanding why ML systems are uniquely challenging.

Quote to Consider: "The goal of MLOps isn't more models in production; it's more business value from models in production." - This mindset shift is crucial for successful implementation.

That's it for our first issue! Next week, we'll explore "Key MLOps Tools in 2025" and examine the free and open-source options that can transform your ML workflow.

Have thoughts or questions? Hit reply - I read every response personally.

Until next week,

IterAI

P.S. If you found this valuable, please share it with colleagues who might be struggling with the ML-to-production gap.